Key Takeaways

- MARS is an innovative strategy for improving reward modeling in AI.

- It focuses on ambiguous preference pairs to enhance training efficiency.

- The framework shows empirical gains over traditional augmentation methods.

Quick Summary

Reward modeling is crucial in aligning artificial intelligence systems, particularly in techniques like Reinforcement Learning from Human Feedback (RLHF) and Reinforcement Learning from AI Feedback (RLAIF). These methods depend on effectively optimizing policies, which guide AI behavior. However, the success of reward models relies on human-labeled preference data, which is often limited and expensive to obtain. This has led researchers to explore data augmentation strategies to improve training efficiency.

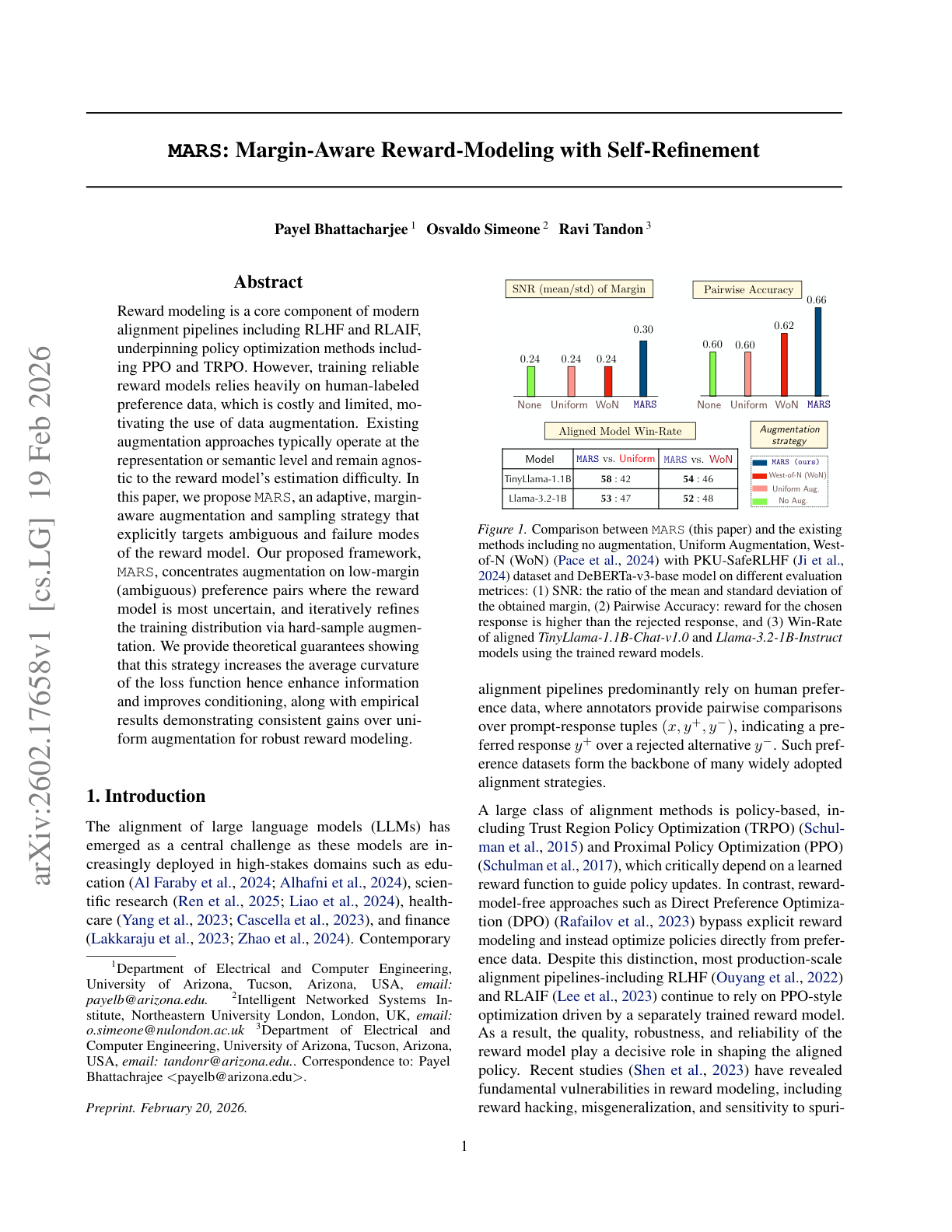

Traditional augmentation methods typically modify data at a semantic level without addressing the specific challenges faced by reward models. This can lead to ineffective training, as these methods do not consider where the model struggles most. To tackle this issue, researchers have introduced MARS, a new adaptive, margin-aware strategy that focuses on enhancing the training of reward models.

MARS specifically targets low-margin preference pairs—situations where the reward model’s predictions are most uncertain. By concentrating on these ambiguous cases, MARS refines the training process through hard-sample augmentation, which emphasizes areas where the model needs the most improvement. This approach is designed to increase the average curvature of the loss function, which can enhance the model’s information processing and overall performance.

Theoretical guarantees provided in the research suggest that MARS effectively improves the conditioning of the reward model. Furthermore, empirical results indicate that MARS outperforms uniform augmentation methods, leading to more robust and reliable reward modeling. This advancement could significantly impact the development of AI systems, making them more aligned with human preferences and behaviors.

In summary, the introduction of MARS represents a promising shift in reward modeling strategies, focusing on the most challenging aspects of model training. This could lead to more effective AI systems that better understand and respond to human preferences.

Disclaimer: I am not the author of this great research! Please refer to the original publication here: https://arxiv.org/pdf/2602.17658v1