Key Takeaways

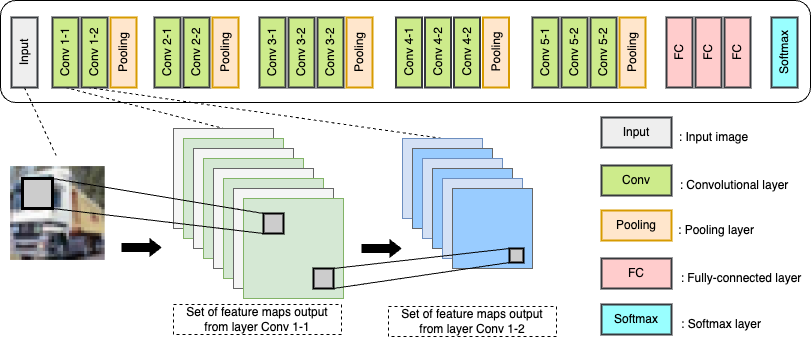

- Efficient CNN Deployment: A new filter-pruning approach removes more than a third of the filters in a convolutional neural network (CNN) while maintaining high accuracy.

- Conditional Mutual Information (CMI): The method ranks feature importance using CMI and retains only the most informative features in each layer.

- Minimal Accuracy Loss: On VGG16 with CIFAR-10, the pruned model showed just a 0.32% drop in accuracy.
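To make the CMI idea concrete, here is a minimal sketch of a plug-in estimator for I(X; Y | Z), which measures how much new information X carries about Y once Z is already known. It assumes the quantities have been discretized into hashable codes (for example, binned filter activations). The function name `conditional_mutual_information` is hypothetical, and the paper's own estimator may differ. It computes the sum over (x, y, z) of p(x,y,z) · log2[p(z) p(x,y,z) / (p(x,z) p(y,z))] directly from counts.

```python
from collections import Counter
from math import log2


def conditional_mutual_information(xs, ys, zs):
    """Plug-in estimate of I(X; Y | Z), in bits, from paired discrete samples.

    Assumes every value is hashable (e.g. an int bin index or a tuple of them).
    """
    n = len(xs)
    if n == 0 or not (n == len(ys) == len(zs)):
        raise ValueError("xs, ys and zs must be non-empty and of equal length")
    c_xyz = Counter(zip(xs, ys, zs))
    c_xz = Counter(zip(xs, zs))
    c_yz = Counter(zip(ys, zs))
    c_z = Counter(zs)
    total = 0.0
    for (x, y, z), k in c_xyz.items():
        # p(x,y,z) * log2[p(z) p(x,y,z) / (p(x,z) p(y,z))]; the 1/n factors cancel in the ratio
        total += (k / n) * log2(c_z[z] * k / (c_xz[(x, z)] * c_yz[(y, z)]))
    return total


# X alone says nothing about Y = X XOR Z, but once Z is known, X determines Y.
x = [0, 0, 1, 1]
z = [0, 1, 0, 1]
y = [a ^ b for a, b in zip(x, z)]
print(conditional_mutual_information(x, y, [0] * 4))  # I(X;Y) with a constant Z → 0.0
print(conditional_mutual_information(x, y, z))         # I(X;Y|Z) → 1.0
```

The XOR case shows why conditioning matters for ranking: a feature can look useless in isolation yet be highly informative given the features already kept. Conversely, an exact copy of a kept feature has zero CMI.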

Quick Summary

Convolutional neural networks (CNNs) are powerful tools for image classification, but they are often too large for resource-constrained hardware. Researchers have developed a pruning method that uses Conditional Mutual Information (CMI) to measure and rank the importance of features across network layers. This ranking makes it possible to remove less critical filters selectively while preserving essential information. The approach also supports parallel pruning in both forward and backward directions. Applied to the VGG16 architecture on the CIFAR-10 dataset, the technique reduced the number of filters by more than a third, with only a 0.32% drop in accuracy. This makes CNNs more practical to deploy on devices with limited resources.
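The ranking-then-pruning step can be sketched as a greedy forward selection. This is an assumption-laden illustration, not the paper's procedure, and it does not model the parallel forward/backward pruning. Each filter's response per image is assumed to be already discretized into small integer codes. At each step, the sketch keeps the filter with the largest I(filter; label | filters already kept), conditioning on the kept filters jointly as a tuple per sample. The helpers `rank_filters_by_cmi` and `filters_to_keep` and the `keep_fraction` parameter are hypothetical.

```python
from collections import Counter
from math import ceil, log2


def cmi(xs, ys, zs):
    """Plug-in estimate of I(X; Y | Z) in bits from paired discrete samples."""
    n = len(xs)
    c_xyz, c_xz = Counter(zip(xs, ys, zs)), Counter(zip(xs, zs))
    c_yz, c_z = Counter(zip(ys, zs)), Counter(zs)
    return sum((k / n) * log2(c_z[z] * k / (c_xz[(x, z)] * c_yz[(y, z)]))
               for (x, y, z), k in c_xyz.items())


def rank_filters_by_cmi(filter_codes, labels):
    """Greedy forward ranking (hypothetical helper).

    filter_codes[f][i] is filter f's discretized response on sample i.
    Each step picks the filter with the largest I(filter; label | ranked filters),
    conditioning on the ranked filters jointly via one tuple per sample.
    Ties go to the lowest remaining index.
    """
    remaining = list(range(len(filter_codes)))
    ranking = []
    context = [()] * len(labels)  # joint code of the filters ranked so far
    while remaining:
        best = max(remaining, key=lambda f: cmi(filter_codes[f], labels, context))
        ranking.append(best)
        remaining.remove(best)
        context = [c + (v,) for c, v in zip(context, filter_codes[best])]
    return ranking


def filters_to_keep(filter_codes, labels, keep_fraction):
    """Indices of the top-ranked filters; the remaining filters would be pruned."""
    ranking = rank_filters_by_cmi(filter_codes, labels)
    n_keep = max(1, ceil(keep_fraction * len(ranking)))
    return sorted(ranking[:n_keep])


a = [0, 0, 1, 1, 0, 0, 1, 1]
b = [0, 1, 0, 1, 0, 1, 0, 1]
labels = [p ^ q for p, q in zip(a, b)]
codes = [a, b, list(a)]  # filter 2 is a redundant copy of filter 0
print(rank_filters_by_cmi(codes, labels))   # → [0, 1, 2]
print(filters_to_keep(codes, labels, 0.6))  # → [0, 1]
```

In this toy example, the redundant copy (filter 2) is ranked last and pruned. Filter 1, which is uninformative on its own, is kept because it is complementary to filter 0. One caveat: as more filters are kept, the joint conditioning codes become sparse, so a plug-in count estimator like this one becomes unreliable. A practical implementation would need a more careful estimator.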

Disclaimer: I am not the author of this great research! Please refer to the original publication here: https://arxiv.org/pdf/2411.18578.pdf