Key Takeaways

- Introduction of Helvipad Dataset: A comprehensive dataset comprising 40,000 frames from diverse environments, captured using dual 360° cameras and LiDAR, providing accurate depth and disparity labels.

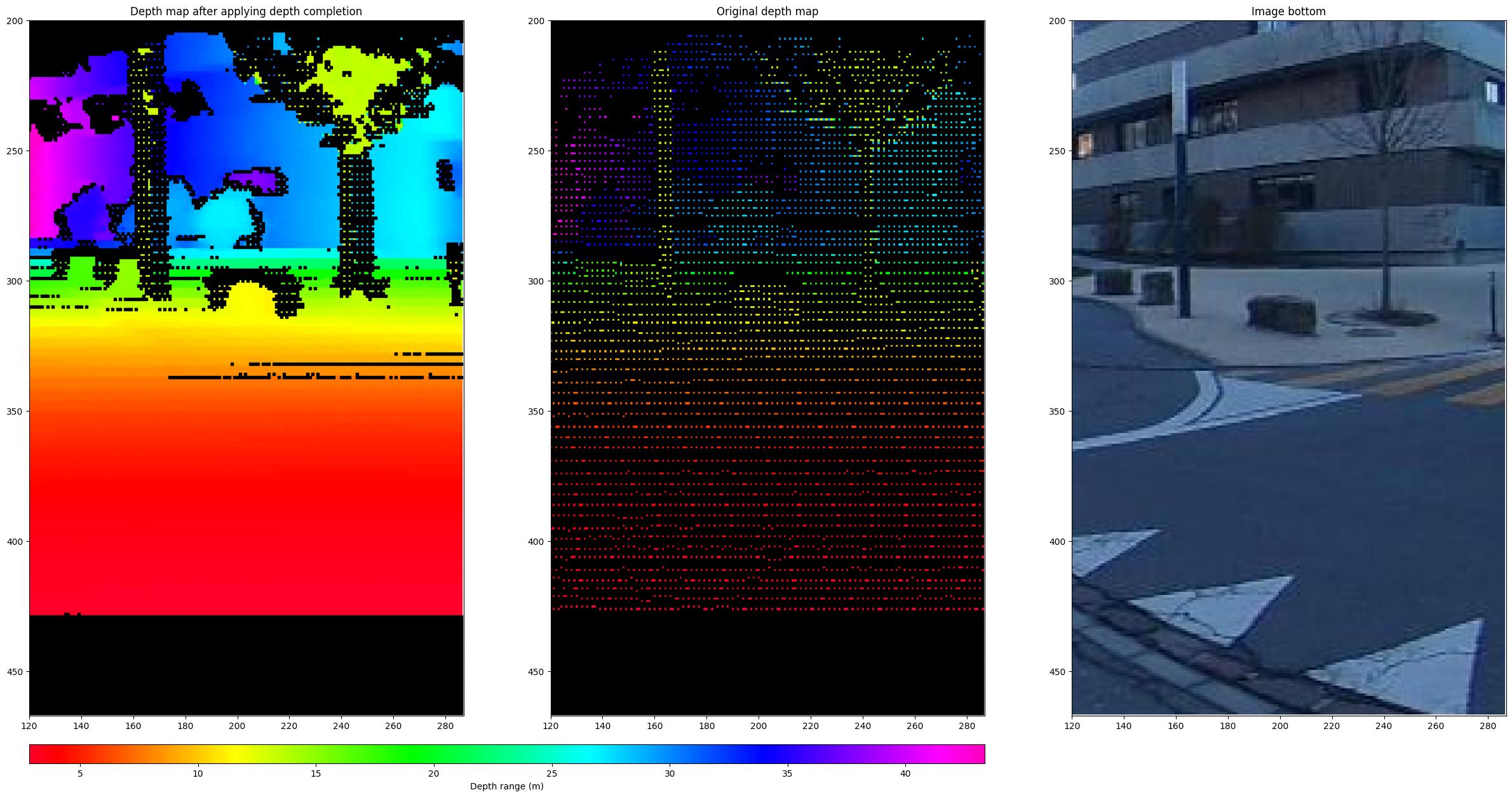

- Augmented Training Data: Depth completion densifies the sparse labels obtained from LiDAR, giving models more supervision per frame during training.

- Benchmarking and Model Adaptations: Evaluation of leading stereo depth estimation models on omnidirectional images, with proposed adaptations leading to improved performance in 360° imaging scenarios.

Quick Summary

Omnidirectional imaging, which captures a full 360-degree view of a scene, has been underexplored in stereo depth estimation due to a lack of suitable data. The Helvipad dataset addresses this gap by offering 40,000 frames from various indoor and outdoor scenes, captured with a top-bottom 360° camera setup and a LiDAR sensor. Accurate depth and disparity labels are generated by projecting the LiDAR 3D point clouds onto equirectangular images, a format that unwraps the spherical view onto a flat grid whose axes correspond to longitude and latitude. Because the projected points cover only a fraction of the pixels, depth completion is then applied to increase label density, giving depth estimation models more supervision during training.
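To make the projection step concrete, here is a minimal sketch of how 3D points can be mapped to equirectangular pixels and turned into a sparse depth map. It is an illustration and not the authors' pipeline: the function names, the axis convention (x right, y down, z forward), and the image size are all assumptions, and the points are assumed to be already expressed in the camera frame (that is, the LiDAR-to-camera calibration has been applied).

```python
import numpy as np

def project_to_equirect(points, width, height):
    """Map Nx3 camera-frame points to equirectangular (u, v) and radial depth.

    Assumed convention (hypothetical): x right, y down, z forward.
    Points must not lie exactly at the origin.
    """
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    depth = np.linalg.norm(points, axis=1)            # distance from camera center
    lon = np.arctan2(x, z)                            # longitude in [-pi, pi], 0 = forward
    lat = np.arcsin(np.clip(y / depth, -1.0, 1.0))    # latitude in [-pi/2, pi/2], positive = down
    u = (lon / (2.0 * np.pi) + 0.5) * width           # horizontal pixel coordinate
    v = (lat / np.pi + 0.5) * height                  # vertical pixel coordinate
    return u, v, depth

def sparse_depth_map(points, width, height):
    """Rasterize points into a sparse depth map; 0 marks pixels without a label."""
    points = points[np.linalg.norm(points, axis=1) > 0]   # drop degenerate points
    u, v, depth = project_to_equirect(points, width, height)
    cols = np.floor(u).astype(int) % width                 # wrap around horizontally
    rows = np.clip(np.floor(v).astype(int), 0, height - 1)
    depth_map = np.full((height, width), np.inf)
    np.minimum.at(depth_map, (rows, cols), depth)          # keep the nearest point per pixel
    depth_map[np.isinf(depth_map)] = 0.0
    return depth_map

# Two points on the same forward ray and one point to the right.
pts = np.array([[0.0, 0.0, 2.0], [0.0, 0.0, 5.0], [1.0, 0.0, 0.0]])
dm = sparse_depth_map(pts, width=8, height=4)
print(np.count_nonzero(dm), "labeled pixels out of", dm.size)
```

Even in this toy example most pixels stay empty, which is the sparsity that depth completion is meant to reduce.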

Benchmarking existing stereo depth estimation models revealed that while recent methods perform adequately, challenges persist in achieving accurate depth estimation for omnidirectional data. To address this, the authors introduced specific adaptations to stereo models, resulting in improved depth estimation performance for 360° imaging. This advance has potential applications in virtual reality, robotics, and autonomous systems, where understanding the depth of the surrounding environment is crucial.

Disclaimer: I am not the author of this great research! Please refer to the original publication here: https://arxiv.org/pdf/2411.18335.pdf