Key Takeaways

- Introduction of Buffer Anytime: A novel framework that estimates depth and normal maps from video without requiring paired training data.

- Leveraging Single-Image Priors: Utilizes existing single-image estimation models combined with temporal consistency constraints to improve video analysis.

- Improved Temporal Consistency: Achieves stable and accurate geometric information across video frames, comparable to models trained on large-scale datasets.

Quick Summary

Estimating depth and surface normals—geometric properties that describe the distance and orientation of surfaces—from video is crucial for applications like 3D reconstruction and autonomous navigation. Traditional methods often depend on extensive datasets where each video frame is annotated with corresponding depth and normal information, a process that is both time-consuming and resource-intensive.

Addressing this challenge, researchers have developed Buffer Anytime, a framework that eliminates the need for such paired training data. Instead, it leverages single-image priors—pre-existing models trained to estimate depth and normals from individual images—and enforces temporal consistency across video frames. Temporal consistency ensures that the estimated geometric properties remain stable and coherent throughout the video sequence.

Buffer Anytime employs a lightweight temporal attention architecture, which focuses on relevant information across frames, and integrates optical flow—a technique that captures the motion of objects between frames—to maintain smooth transitions. This combination allows the framework to produce high-quality depth and normal maps from video without relying on large annotated datasets.

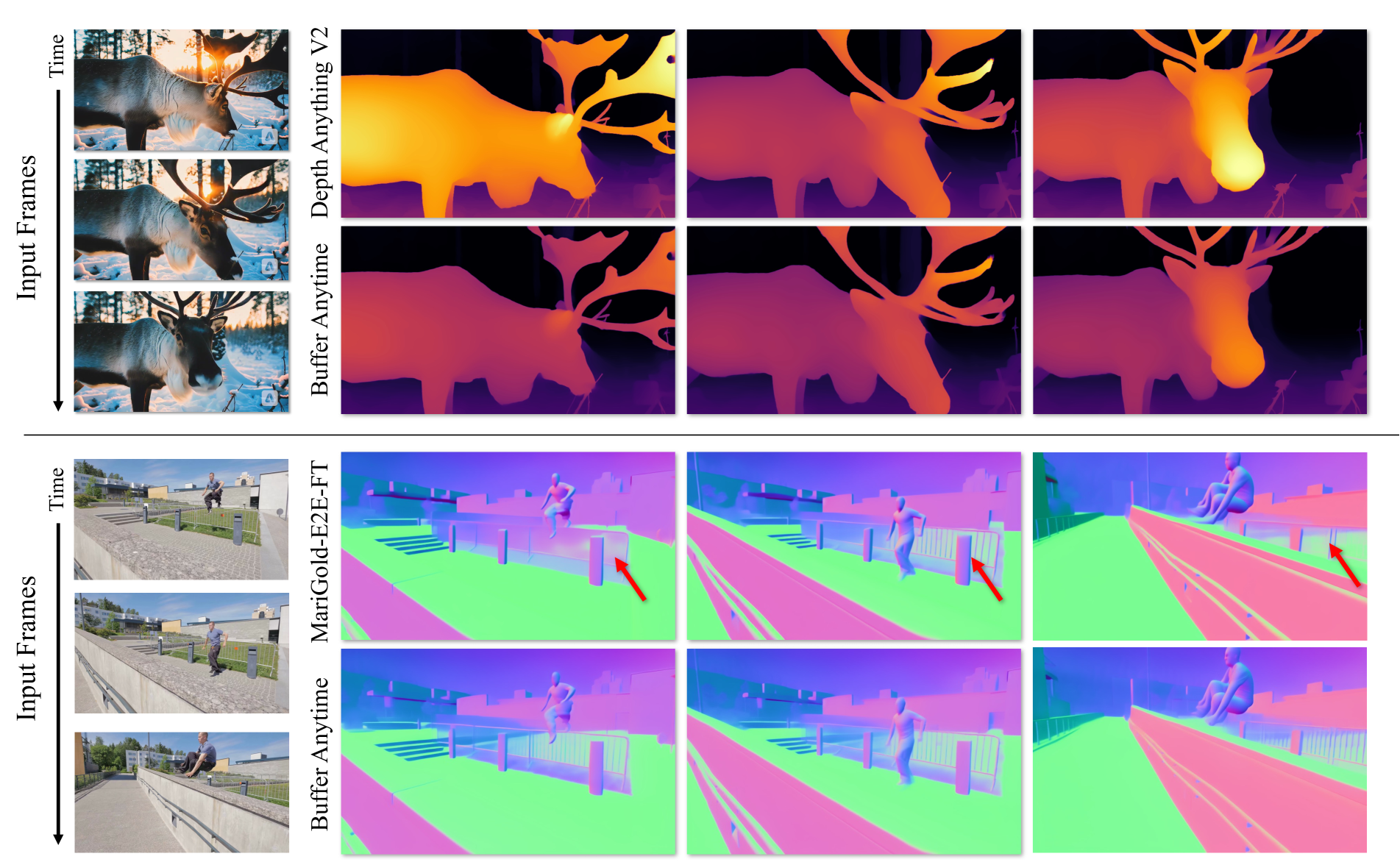

When applied to leading single-image models like Depth Anything V2 and Marigold-E2E-FT, Buffer Anytime significantly enhances temporal consistency while preserving accuracy. Experiments demonstrate that this approach not only surpasses traditional image-based methods but also achieves results comparable to state-of-the-art video models trained on extensive paired datasets.

By reducing the dependency on large-scale annotated data, Buffer Anytime offers a more efficient pathway for developing robust video analysis tools, potentially accelerating advancements in fields that require precise 3D understanding from video input.

Disclaimer: I am not the author of this great research! Please refer to the original publication here: https://arxiv.org/pdf/2411.17249.pdf